Compare ATEF Text Based on the Works of Jon M. Quigley

It’s a Great Day

LinkedIn connections have asked me for my perspective on test and verification (ATEF vs Jon M. Quigley testing principles).

I like what I do. I have spent years (decades, actually) studying and applying product development approaches. This includes time as a development engineer, test engineer, and manager of a test department that encompassed a range of domains: environmental and EMC, component integration (HW/SW), virtual systems integration testing (HIL), and live fire systems integration – on the road again.

I enjoy the exchanges with others on these topics, from the one-to-ones to the SPAMcast chats with Tom Cagley. I feel some satisfaction from having these conversations. These are opportunities to explore the experiences of others and what I think and know. This includes virtual chats and queries coming in from LinkedIn. On the other hand, I do this to make a living, keep a roof over my head, help my kid pay off student loans, and one day, be prepared to handle the fact that I may not be able to work and provide for my family.

https://www.linkedin.com/in/robert-fey-78178b154/

Alignment with Quigley’s Verification Principles

The ATEF (Automotive Testing Efficiency Framework) text presents a structured, capability-focused approach to improving testing efficiency in the automotive domain. This aligns with Jon M. Quigley’s advocacy for frameworks that provide clarity, structure, and measurable improvement in testing, as seen in his discussions about ATEF itself and his broader work on automated testing and simulation. [1] [2] [3]

No matter the approach or philosophy taken when it comes to testing, verifying, and validating the product, the operational environment is key: the organization’s culture around testing. That culture includes:

- Leadership Commitment and Vision

- Clear Goals and Expectations

- Open Communication and Collaboration

- Continuous Learning and Adaptation

- Early Integration of QA

- Alignment on Quality Definition

- Cross-Functional Training and Teamwork

- Feedback and Continuous Improvement

- Data-Driven Decision Making

Strengths

Focus on Verification Capabilities over Methods:

The text emphasizes evaluating key efficiency drivers, such as automation, traceability, reuse, maintainability, and CI/CD readiness, rather than prescribing specific test methods. This aligns with Quigley’s view that effective testing improvement stems from understanding and optimizing the underlying capabilities and processes rather than merely layering on new tools or techniques.

Neutral and Tool-Independent Evaluation:

ATEF is a neutral framework, not tied to any tool or process model. Quigley often stresses the importance of tool independence and the need for frameworks that can be adapted to various organizational contexts, which this approach supports.

Maturity Levels and Benchmarking:

Using maturity levels for each capability, enabling benchmarking and structured improvement, is consistent with Quigley’s emphasis on metrics and measurable progress in testing efficiency. He frequently highlights the value of using objective metrics to guide improvement efforts and to facilitate evidence-based conversations about testing performance.
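To make the benchmarking idea concrete, here is a minimal sketch of how per-capability maturity levels could be recorded and compared against a target. The capability names are the efficiency drivers listed above; the 1-to-5 scale, the team name, the scores, and the `gaps` helper are all illustrative assumptions, not part of ATEF itself.

```python
from dataclasses import dataclass

# Capability names come from the text above; everything else is hypothetical.
CAPABILITIES = (
    "automation",
    "traceability",
    "reuse",
    "maintainability",
    "ci_cd_readiness",
)


@dataclass(frozen=True)
class Assessment:
    team: str
    levels: dict  # capability -> maturity level (assumed scale: 1 lowest, 5 highest)


def gaps(current: Assessment, target: dict) -> dict:
    """Return capability -> shortfall for capabilities below target, largest first."""
    shortfalls = {
        c: target[c] - current.levels[c]
        for c in CAPABILITIES
        if current.levels[c] < target[c]
    }
    return dict(sorted(shortfalls.items(), key=lambda kv: kv[1], reverse=True))


# Made-up scores for a made-up team, benchmarked against a uniform target of 3.
team = Assessment(
    "hil_team",
    {
        "automation": 3,
        "traceability": 2,
        "reuse": 1,
        "maintainability": 3,
        "ci_cd_readiness": 2,
    },
)
target = {c: 3 for c in CAPABILITIES}

print(gaps(team, target))
```

Sorting by shortfall is one simple way to turn an assessment into a prioritized conversation about where to invest next; a real assessment would likely weight capabilities by their cost and risk.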

Holistic View of Testing Efficiency:

The text recognizes that testing efficiency is about more than just reducing effort, connecting it to speed, stability, and confidence in software evolution. This reflects Quigley’s holistic perspective on the value of testing. He notes that efficiency improvements should support better product outcomes, not just lower costs or faster execution.

Areas for Improvement

Explicit Integration of Simulation:

While ATEF mentions automation and efficiency, it does not explicitly highlight the role of simulation, which Quigley identifies as a key enabler for early defect detection, requirements clarification, and cost savings, especially before hardware is available. Integrating simulation as a core capability or explicitly referencing its benefits could strengthen the framework’s alignment with Quigley’s recommendations.

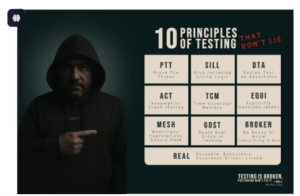

Testing Theory and Philosophy:

Quigley advocates for a clear testing theory or philosophy to unify test plans and provide rationale for test architecture (e.g., risk-based, compliance, combinatorial, and destructive testing). The ATEF text could benefit from referencing or encouraging the development of such a guiding philosophy within its framework, ensuring that capability assessments are grounded in a coherent testing strategy.

Hardware Considerations in Automation:

Quigley emphasizes that automated testing is a hardware and software proposition requiring significant investment and skill. The ATEF text focuses on automation but does not address the challenges or considerations related to hardware in automated testing environments, which are highly relevant in the automotive sector.

Fault Detection and Reporting:

The text could be more explicit about how ATEF supports robust fault detection, objective pass/fail criteria, and reporting, areas Quigley identifies as essential for effective automated testing and for ensuring that efficiency gains do not compromise defect containment.
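As a rough illustration of what objective pass/fail criteria and reporting can look like in an automated test, the sketch below checks measurements against explicit limits and reports a verdict for each criterion. The signal names, limits, and measured values are hypothetical, and this is one possible shape for such a check rather than anything prescribed by ATEF.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str    # hypothetical signal name
    low: float   # lowest acceptable value
    high: float  # highest acceptable value


def evaluate(criteria, measurements):
    """Return a (name, value, verdict) tuple for every criterion."""
    results = []
    for c in criteria:
        value = measurements.get(c.name)
        if value is None:
            # A missing measurement is reported, never silently passed.
            verdict = "NOT_RUN"
        elif c.low <= value <= c.high:
            verdict = "PASS"
        else:
            verdict = "FAIL"
        results.append((c.name, value, verdict))
    return results


# Illustrative limits and readings only.
criteria = [
    Criterion("supply_voltage_v", 9.0, 16.0),
    Criterion("can_response_ms", 0.0, 50.0),
    Criterion("wake_up_time_ms", 0.0, 200.0),
]
measurements = {"supply_voltage_v": 13.8, "can_response_ms": 62.0}

results = evaluate(criteria, measurements)
for name, value, verdict in results:
    print(f"{name}: {value} -> {verdict}")
```

Keeping the limits in data rather than in the tester's head is what makes the verdict objective, and reporting `NOT_RUN` separately keeps an efficiency shortcut from hiding an unverified requirement.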

Summary Table: ATEF vs. Quigley’s Key Themes

It is easier to see this in the form of a table:

| Aspect | ATEF Text Coverage | Quigley’s Emphasis | Alignment/Gap |
| --- | --- | --- | --- |
| Capability-driven improvement | Strong | Strong | Aligned |
| Tool/process neutrality | Strong | Strong | Aligned |
| Metrics and maturity assessment | Strong | Strong | Aligned |
| Simulation as a capability | Weak | Strong | Partial (suggest improvement) |
| Testing philosophy/theory | Weak | Strong | Partial (suggest improvement) |
| Hardware in automation | Weak | Strong | Partial (suggest improvement) |
| Fault detection/reporting | Moderate | Strong | Partial (suggest improvement) |

Something’s Missing

I would add another dimension that is not explicitly stated in either model but is demonstrated in the non-verification books I have written, especially the one on team learning with my brother, Shawn P. Quigley. The book is available on Amazon. I write about developing a common mental model for the approach to testing within the test group or department. This is not meant to be a static model, but one that grows as the team learns and matures in its craft.

Conclusion

The ATEF text aligns well with Jon M. Quigley’s principles, particularly in its emphasis on structured, measurable, and capability-driven testing improvement. It stands out for its neutrality, focus on key efficiency drivers, and use of maturity models. However, it could be strengthened by explicitly incorporating simulation, encouraging a unified testing philosophy, addressing hardware considerations in automation, and detailing approaches to fault detection and reporting, all of which are highlighted in Quigley’s body of work.

For more information, contact us:

The Value Transformation LLC store.

Follow us on social media at:

Amazon Author Central https://www.amazon.com/-/e/B002A56N5E

Follow us on LinkedIn: https://www.linkedin.com/in/jonmquigley/

https://www.linkedin.com/company/value-transformation-llc

[1] https://www.linkedin.com/posts/jonmquigley_sae-internationals-dictionary-of-testing-activity-7273400126801391617-qCmM/

[2] https://www.linkedin.com/pulse/automated-testing-simulation-jon-m-quigley-ms-pmp-ctfl/

[3] https://www.linkedin.com/posts/synopsys-automotive-testing_atef-activity-7272909318570549248-K8fw/