Test, Inspection, Evaluation Master Plan Organized

TIEMPO – Test, Inspection, Evaluation Master Plan Organized

by Jon M. Quigley and Kim Robertson

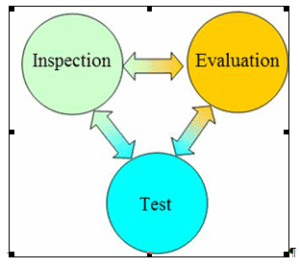

PRODUCT DEVELOPMENT TEST, INSPECTION AND EVALUATION

Ensuring product quality is not accomplished solely through testing and verification activities. Testing is but a fraction of the techniques at an organization's disposal to improve its development quality. Good planning of the product incarnations, that is, a phased and incremental delivery of the feature content, makes it possible for an organization to employ test, inspection and evaluation as tools for competitive advantage. To really improve (and prove) product quality, a more comprehensive approach is required.

The Test, Inspection & Evaluation Master Plan Organized (TIEMPO) adds inspection as an explicit method to the Test and Evaluation Master Plan [TEMP, specified in IEEE 1220 and MIL-STD-499B (Draft)] to support product quality. TIEMPO expands the concept of a Test and Evaluation Master Plan by focusing on staged deliveries, with each product/process release a superset of the previous release. We can consider these iterations our development baselines, closely linked to our configuration management activities. Each package is well defined; likewise, the test, inspection and evaluation demands are well defined for all iterations. Ultimately, the planned product releases are coordinated with evaluation methods for each delivery. Under TIEMPO, inspections cover:

- Iterative software package contents

- Iterative hardware packages

- Software reviews

- Design reviews

  - Mechanical

  - Embedded product

- Design Failure Mode and Effects Analysis (DFMEA)

- Process Failure Mode and Effects Analysis (PFMEA)

- Schematic reviews

- Software Requirements Specification reviews

- System Requirements Reviews

- Functional Requirements Reviews

- Prototype part inspections

- Production line process (designed)

- Production line reviews

- Project documentation

- Schedule plans

- Budget plans

- Risk management plans

A. Philosophy of the Master Plan

At its core, TIEMPO helps coordinate the functional growth of our product. Each package has a defined set of contents, to which we apply our entire battery of quality safeguarding techniques. This approach (defined builds of moderate size and constant critique) has a very agile character, allowing for readily available reviews of the product. In addition, TIEMPO reduces risk by developing superset releases in which each subset remains relatively untouched. Most defects will reside in the new portion, because the previously developed part of the product or process is now a subset that has already been through test, inspection and evaluation. Should the previous iteration contain unresolved defects, we will have had the opportunity between iterations to correct them. Frequent critical reviews are used to guide the design and to find faults. The frequent testing facilitates quality growth and reliability growth, and it gives us data from which to assess the product's readiness level (that is, whether we should launch the product).
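The superset idea can be made concrete with a minimal Python sketch. It assumes each package can be described by a set of feature identifiers (the package and feature names are hypothetical) and checks that every planned release contains its predecessor, then reports the new content in each release, which is where most defects are expected to reside.

```python
"""Sketch: verify that planned packages form superset releases and report
the new content in each one. Package and feature names are hypothetical."""

from typing import Dict, List, Set, Tuple

# Hypothetical release plan: package name -> feature content, in delivery order.
RELEASE_PLAN: List[Tuple[str, Set[str]]] = [
    ("P1", {"power_up", "can_comm"}),
    ("P2", {"power_up", "can_comm", "diagnostics"}),
    ("P3", {"power_up", "can_comm", "diagnostics", "display"}),
]


def new_content(plan: List[Tuple[str, Set[str]]]) -> Dict[str, Set[str]]:
    """Return the features each package adds over its predecessor.

    Raises ValueError if a package drops content from the previous one,
    i.e. if it is not a superset release.
    """
    deltas: Dict[str, Set[str]] = {}
    previous: Set[str] = set()
    for name, features in plan:
        missing = previous - features
        if missing:
            raise ValueError(f"{name} is not a superset; drops {sorted(missing)}")
        deltas[name] = features - previous
        previous = features
    return deltas


if __name__ == "__main__":
    for name, added in new_content(RELEASE_PLAN).items():
        print(f"{name}: concentrate new test effort on {sorted(added)}")
```

The carried-over subset still receives regression testing; the reported delta only indicates where to concentrate new test, inspection and evaluation effort.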

B. Benefits of the Master Plan

Experience suggests the following benefits arise:

- Well planned functional growth in iterative software and hardware packages

- Ability to prepare for test (known build content), inspection and evaluation activities based upon clearly identified packages

- Linking test, inspection and evaluation to design iterations (no testing or inspecting of items that are not yet in the build)

- Reduced risk

- Identification of all activities that safeguard quality, even before material is available and testing can take place

- Ease of stakeholder assessment, including customer access for review of product evolution and appraisal activities

Experience also indicates that at least 15% of the time associated with downstream troubleshooting is wasted in unsuccessful searches for data, simply because of a lack of meaningful information associated with the development process baselines. The TIEMPO approach aims to eliminate this waste by ferreting out issues earlier in the process, leaving more money for up-front product refinement.

C. An overview of one approach:

How each of these pieces fits together is shown below. TIEMPO need not be restricted to phase-oriented product development; any incremental and iterative approach, including entrepreneurial activities, can benefit from constant critique.

D. System Definition:

The TIEMPO document and processes begin with the system definition. Linked to the configuration management plan, it describes the iterative product development baselines that build up to the complete functionality of the final product. In other words, we describe each incarnation of the product delivered in each package. We are essentially describing our functional growth as we move from little content to the final product. Each package will have incremental feature content and bug fixes from the previous iteration. By defining this up front, we can link the testing, inspection and evaluation activities not only to an iteration but to specific attributes of that iteration, and capture those links in an associative data map in our CM system. In the example of testing, we know the specific test cases we will conduct by mapping the product instantiation to the specifications and ultimately to test cases. We do this through our configuration management activities. We end up with a planned road map of the product development that our team can follow. Of course, as things change, we update the TIEMPO document through our configuration management actions.

E. Test (Verification):

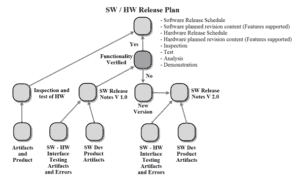

Test or verification consists of those activities typically associated with determining whether the product meets the specification or original design criteria. If an incremental and iterative approach is applied, prototype parts are constantly compared against specifications. The parts will not be made entirely with production processes; their production content will increase as the project progresses and we approach production intent. Though prototype parts may not represent production in terms of durability, they should be a reasonable facsimile of the shape and feature content of the final product. We use these parts to reduce risk by not jumping from idea to final product without learning along the way, and what we learn from this testing helps us gauge the future quality of the resulting product. It is obvious how testing fits into TIEMPO. However, there are some non-obvious opportunities to apply TIEMPO. We could apply inspection to the test plan: did we get the testing scope correct? We can also use this inspection technique on our test cases, analyzing whether we will indeed stress the product in a way valuable to our organization and project, not to mention that we can inspect software long before it is even executable. The feedback from this inspection process will allow us to refine the testing scope, the test cases, or any proposed non-specification or exploratory testing. The testing relationships in a typical HW/SW release plan are shown below.
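Part of the test plan inspection ("did we get the testing scope correct?") can be mechanized. The sketch below is one hypothetical way to do it: given the specifications implemented in a build and the specifications each test case exercises (both assumed inputs with made-up identifiers), it flags specifications that no test covers and test cases that target content not yet in the build.

```python
"""Sketch: inspecting test scope against one build's content.
All identifiers are hypothetical."""

from typing import Dict, Set, Tuple


def inspect_scope(
    build_specs: Set[str], test_targets: Dict[str, Set[str]]
) -> Tuple[Set[str], Set[str]]:
    """Return (uncovered specifications, out-of-scope test cases).

    build_specs:  specifications implemented in this build.
    test_targets: test case id -> specifications that test exercises.
    """
    covered: Set[str] = set().union(*test_targets.values())
    uncovered = build_specs - covered
    # A test is out of scope if it exercises anything not yet in the build.
    out_of_scope = {
        tc for tc, specs in test_targets.items() if not specs <= build_specs
    }
    return uncovered, out_of_scope


if __name__ == "__main__":
    build = {"SRS-001", "SRS-002", "SRS-003"}
    plan = {
        "TC-001": {"SRS-001"},
        "TC-002": {"SRS-002"},
        "TC-010": {"SRS-010"},  # targets a feature planned for a later package
    }
    missing, premature = inspect_scope(build, plan)
    print("specs with no test:", sorted(missing))
    print("tests for content not in build:", sorted(premature))
```

A check like this does not replace the human review of whether the tests stress the product in a valuable way; it only catches gross scope gaps before that review.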

F. Reliability testing

In the case of reliability testing, we assess the probable quality behavior of the product or process over some duration. Finding failures in the field is a costly proposition: each returned part often costs the producer five to ten times its sales price, absorbed from profit, not to mention the intangible cost of customer dissatisfaction. Small sample sizes can be used for reliability testing when a baseline exists (combining Weibull and Bayesian analytical techniques); larger sample sizes are needed when there is no baseline. Physical models are used for accelerated testing in order to compute probable product life. Inferior models will hamper our progress, especially when a baseline does not exist. Our approach is specified in the TIEMPO document, along with the specific packages (hardware/software) used to perform this activity. Thus our development and reliability testing are linked together via our configuration management work.
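The text does not name a particular physical model or Weibull/Bayesian method, so the following sketch makes two assumptions: the Arrhenius temperature model converts accelerated test hours into equivalent use hours, and the zero-failure Weibayes bound combines a Weibull shape parameter borrowed from a baseline with the test data. The activation energy, temperatures, test hours, and sample size are all hypothetical.

```python
"""Sketch: accelerated-test arithmetic for a small-sample reliability
demonstration. The Arrhenius model and the zero-failure Weibayes bound are
assumed choices of physical model and Weibull/Bayesian technique."""

import math
from typing import Sequence

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_af(ea_ev: float, t_use_c: float, t_test_c: float) -> float:
    """Acceleration factor between test and use temperatures (inputs in deg C)."""
    t_use_k = t_use_c + 273.15
    t_test_k = t_test_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use_k - 1.0 / t_test_k))


def weibayes_eta_lower(
    use_hours: Sequence[float], beta: float, confidence: float
) -> float:
    """Lower confidence bound on Weibull characteristic life, zero failures.

    beta is the shape parameter carried over from a baseline product;
    borrowing it is what lets a small sample suffice.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1")
    total = sum(t ** beta for t in use_hours)
    return (total / -math.log(1.0 - confidence)) ** (1.0 / beta)


def weibull_reliability(t: float, eta: float, beta: float) -> float:
    """Probability of surviving to time t: R(t) = exp(-(t/eta)^beta)."""
    return math.exp(-((t / eta) ** beta))


if __name__ == "__main__":
    # Hypothetical plan: 6 units, 1000 h each at 125 C, field use at 55 C.
    af = arrhenius_af(ea_ev=0.7, t_use_c=55.0, t_test_c=125.0)
    equivalent = [1000.0 * af] * 6
    eta_l = weibayes_eta_lower(equivalent, beta=2.0, confidence=0.90)
    print(f"AF = {af:.1f}, eta_lower = {eta_l:,.0f} h")
    print(f"R(10,000 h) >= {weibull_reliability(10_000, eta_l, 2.0):.4f}")
```

Because beta comes from the baseline, a poor baseline or an inferior physical model distorts the bound directly, which is the risk the paragraph above warns about.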

G. So, when do we start testing?

Many may believe that it is not possible to test a product without some hardware or software samples available. Testing is possible, however, if we have developed simulators that let us explore the product possibilities in advance of the material or software. This requires accurate models as well as simulation capability. To ensure accurate models, we compare model results with real-world test results to determine the gap and make the necessary adjustments to the models. If these tools are sophisticated enough, we may even use them to develop our requirements. These activities reduce the risk and cost of the end design because we have already performed some evaluation of the design proposal. As prototype parts become available, we test them alone or in concert with our simulators. If we have staged or planned our function packages delivered via TIEMPO, we will test an incrementally improving product.
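Comparing model results with real-world results can be as simple as measuring the gap and fitting a correction. This sketch assumes a linear gain/offset correction fitted by ordinary least squares and uses made-up simulated and measured values; real model adjustments are often physical (changing parameters inside the model) rather than a correction applied to its output.

```python
"""Sketch: quantifying the gap between simulation and measurement, then
calibrating with a least-squares gain/offset correction.
The data and the linear correction are illustrative assumptions."""

import math
from typing import List, Sequence, Tuple


def rms_gap(model: Sequence[float], measured: Sequence[float]) -> float:
    """Root-mean-square difference between model output and measurement."""
    if len(model) != len(measured) or not model:
        raise ValueError("need equal-length, non-empty series")
    return math.sqrt(sum((m - r) ** 2 for m, r in zip(model, measured)) / len(model))


def fit_correction(
    model: Sequence[float], measured: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares gain and offset so that gain * model + offset ~ measured."""
    n = len(model)
    mean_x = sum(model) / n
    mean_y = sum(measured) / n
    sxx = sum((x - mean_x) ** 2 for x in model)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(model, measured))
    gain = sxy / sxx
    return gain, mean_y - gain * mean_x


def apply_correction(model: Sequence[float], gain: float, offset: float) -> List[float]:
    return [gain * x + offset for x in model]


if __name__ == "__main__":
    # Hypothetical: simulated vs. bench-measured temperature rise (deg C).
    simulated = [10.0, 20.0, 30.0, 40.0]
    measured = [12.0, 22.5, 33.0, 43.5]
    print(f"gap before: {rms_gap(simulated, measured):.2f}")
    a, b = fit_correction(simulated, measured)
    corrected = apply_correction(simulated, a, b)
    print(f"gain={a:.3f} offset={b:.3f} gap after: {rms_gap(corrected, measured):.2f}")
```

Recording the gap before and after each adjustment, per package, gives a traceable history of how far the model can be trusted.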

H. Types of tests during development

When we get into the heavy lifting of product or service testing, we have a variety of methods in our arsenal. At this stage we are trying to uncover any product maladies before they affect us or our customer. We will use approaches such as:

- Compliance testing (testing to specifications)

- Extreme testing (what does it take to destroy and how does the product fail)

- Multi-stimuli or combinatorial testing

- Stochastic (randomized exploratory)
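For multi-stimuli or combinatorial testing, one common technique (not named in the text, so this is an assumption) is pairwise selection: choose a subset of cases in which every pair of parameter values appears together at least once. The greedy sketch below uses hypothetical stimuli and enumerates every candidate combination, which is only practical for small parameter spaces.

```python
"""Sketch: greedy all-pairs (pairwise) test selection for multi-stimuli
testing. Parameter names and levels are hypothetical."""

from itertools import combinations, product
from typing import Dict, List, Set, Tuple

Pair = Tuple[Tuple[str, str], Tuple[str, str]]


def pairs_of(case: Dict[str, str]) -> Set[Pair]:
    """All (parameter, value) pairs exercised together by one test case."""
    items = sorted(case.items())
    return set(combinations(items, 2))


def pairwise_suite(params: Dict[str, List[str]]) -> List[Dict[str, str]]:
    names = sorted(params)
    candidates = [
        dict(zip(names, values)) for values in product(*(params[n] for n in names))
    ]
    uncovered: Set[Pair] = set().union(*(pairs_of(c) for c in candidates))
    suite: List[Dict[str, str]] = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best)
    return suite


if __name__ == "__main__":
    stimuli = {
        "supply_voltage": ["9V", "12V", "16V"],
        "temperature": ["-40C", "25C", "85C"],
        "bus_load": ["low", "high"],
    }
    suite = pairwise_suite(stimuli)
    print(f"{len(suite)} pairwise cases instead of 18 exhaustive")
    for case in suite:
        print(case)
```

Pairwise coverage catches faults triggered by two interacting stimuli; faults needing three or more simultaneous conditions still call for extreme or stochastic testing.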

I. Inspections

Reviews are analogous to inspections. The goal of reviews is to find problems in our work as early as we can. Plenty of assumptions may go undocumented or unvoiced in the creation of these work products. The act of reviewing can ferret out the erroneous or deleterious ones, allowing us to adjust. We can employ a variety of review techniques on our project and product, such as:

- Concept reviews

- Product requirements reviews

- Specification reviews

- System design reviews

- Software design reviews

- Hardware design reviews

- Bill of Materials

- Project and Product Pricing

- Test plan reviews

- Test case reviews

- Prototype inspections

- Technical and User Manuals

- Failure Mode and Effects Analysis (see immediately below)

The Design Failure Mode and Effects Analysis (DFMEA) and the Process Failure Mode and Effects Analysis (PFMEA) employed by the automotive industry can be applied to any industry. These tools represent a formal and well-structured review of the product and the production processes. The method forces us to consider the failure mechanism and its impact. If we have a historical record, we can take advantage of that record or even of previous FMEA exercises. There are two advantages. The first is prioritization by risk: each failure mode receives a calculated number known as the Risk Priority Number (RPN), which is the product of:

- Severity (ranked 1-10)

- Probability (ranked 1-10)

- Detectability (ranked 1-10)

The larger the resulting RPN, the higher the priority, so we address those concerns first. The second advantage fits with the testing portion of TIEMPO: the FMEA approach also links testing to the identified areas of risk. We may alter our approach, or we may choose to explore via testing to assess our prediction. For example, suppose we have a design that we believe may allow water intrusion into a critical area. We may then elect to perform a moisture exposure test to see whether we are right about this event and the subsequent failure we predict.
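The RPN arithmetic and prioritization can be sketched as follows. The failure modes and rankings are hypothetical, and the scale directions in the comments follow the usual FMEA convention (a higher detectability number means the failure is harder to detect), which the text does not spell out. The first line item echoes the water intrusion example.

```python
"""Sketch: ranking FMEA line items by Risk Priority Number (RPN).
Failure modes and rankings are hypothetical; in practice the 1-10 scales
come from the organization's FMEA rating tables."""

from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int       # 1-10, 10 = most severe effect
    probability: int    # 1-10 occurrence ranking, 10 = most likely
    detectability: int  # 1-10, 10 = least likely to be detected

    def __post_init__(self) -> None:
        for name in ("severity", "probability", "detectability"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be 1-10, got {value}")

    @property
    def rpn(self) -> int:
        return self.severity * self.probability * self.detectability


def prioritize(modes: List[FailureMode]) -> List[FailureMode]:
    """Highest RPN first; ties broken by higher severity."""
    return sorted(modes, key=lambda m: (m.rpn, m.severity), reverse=True)


if __name__ == "__main__":
    worksheet = [
        FailureMode("Water intrusion at connector seal", 8, 5, 6),
        FailureMode("Label fades", 2, 6, 2),
        FailureMode("Solder joint fatigue", 7, 3, 8),
    ]
    for m in prioritize(worksheet):
        print(f"RPN {m.rpn:4d}  {m.description}")
```

Here the water intrusion item scores 8 × 5 × 6 = 240 and heads the list, the kind of result that would justify a moisture exposure test.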

J. Evaluation (Validation)

Evaluation can be associated with validation. With these activities we determine the suitability of our product to meet the customer's needs. We use the product (likely a prototype) as our customer would. If the prototype part is sufficiently durable and the risk or severity of a malfunction is low, we may supply some of our closest customers with the product for evaluation. Their feedback guides the remaining design elements and facilitates our analysis of the proposed end product. There are other ways to employ evaluation. Consider a supplier that manufactures a product for a customer who will resell it. We may perform a run-at-rate assessment on the manufacturing line to measure probable production performance under nearly realistic conditions. Now we are evaluating the line under the stresses that would be present during production. We may use the pieces produced during the run-at-rate assessment to perform our testing. This approach is reasonable, since the resulting parts come off the manufacturing line and are built under stresses comparable to those in full production. In fact, we may choose to use these parts in the early customer evaluations mentioned above.
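A run-at-rate result is usually summarized with a few simple figures. This sketch assumes the assessment is judged on demonstrated hourly rate and first-pass yield against planned targets; the metric choice, run length, counts, and targets are all hypothetical, since actual acceptance criteria come from the customer's or organization's launch requirements.

```python
"""Sketch: summarizing a run-at-rate assessment. Metrics, targets and
counts are hypothetical assumptions."""

from dataclasses import dataclass


@dataclass
class RunAtRate:
    run_minutes: float          # duration of the run
    parts_produced: int         # total parts off the line
    parts_good_first_pass: int  # parts passing without rework or repair

    def rate_per_hour(self) -> float:
        return self.parts_produced / (self.run_minutes / 60.0)

    def first_pass_yield(self) -> float:
        return self.parts_good_first_pass / self.parts_produced

    def meets(self, required_rate_per_hour: float, required_yield: float) -> bool:
        return (
            self.rate_per_hour() >= required_rate_per_hour
            and self.first_pass_yield() >= required_yield
        )


if __name__ == "__main__":
    run = RunAtRate(run_minutes=240, parts_produced=480, parts_good_first_pass=468)
    print(f"{run.rate_per_hour():.0f} parts/h, FPY {run.first_pass_yield():.1%}")
    print("meets plan:", run.meets(required_rate_per_hour=110, required_yield=0.95))
```

The same parts counted here can then feed the testing and early customer evaluations described above, so the record should carry the package and line configuration they were built under.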

K. Inspection caveats

By definition, an inspection is a form of quality containment, which means trapping potential escapes of defective products or processes. The function of inspection, then, is to capture substandard material and to stimulate meaningful remediation. Inspecting items such as specifications catches fiascoes before the defects ripple through subsequent development activities, where correction is much more costly. The containment consists of updating the specification and incrementing the revision number while capturing a “lesson learned.” Reviews take time, attention to detail and an analytical assessment of whatever is under critique; anything less will likely waste time and resources.
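The containment step can be captured as a small record. This sketch assumes a simple integer revision scheme and a lesson-learned log attached to each specification; the record layout, document ID, and finding text are hypothetical, and a real CM system would also record the reviewer, date, and approval.

```python
"""Sketch: containing a specification defect found in review by
incrementing the revision and capturing a lesson learned.
The record layout and revision scheme are hypothetical."""

from dataclasses import dataclass, field
from typing import List


@dataclass
class LessonLearned:
    revision: int  # revision that contains the fix
    finding: str


@dataclass
class Specification:
    doc_id: str
    revision: int = 1
    lessons: List[LessonLearned] = field(default_factory=list)

    def contain(self, finding: str) -> int:
        """Apply a review finding: bump the revision and log the lesson."""
        if not finding.strip():
            raise ValueError("a containment needs a recorded finding")
        self.revision += 1
        self.lessons.append(LessonLearned(self.revision, finding))
        return self.revision


if __name__ == "__main__":
    srs = Specification("SRS-100")
    srs.contain("Operating voltage range omitted load-dump transient")
    print(srs.doc_id, "rev", srs.revision, srs.lessons)
```

Refusing an empty finding reflects the point above: a containment without a recorded lesson is a review that took time without leaving anything for the next project.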

L. Product Development Phases

Product development processes are usually industry-specific. One hypothetical, generic model for such a launch process might look like the following:

• CONCEPT

• SYSTEM LEVEL

• PRELIMINARY DESIGN

• CRITICAL DESIGN

• TEST READINESS

• PRODUCTION READINESS

• PRODUCT LAUNCH

For the process outlined above, we could expect to see a test (T), inspection (I) and evaluation (E) per phase as indicated in the chart below:

The design aspects will apply to process design just as much as to product or service design.

M. Conclusion

There is no one silver bullet. Test, inspection and evaluation are instrumental to the successful launch of a new product. This is also true for a major modification of a previously released product or service. We need not limit these techniques to the product; we can also employ them on a new process or even in developing a service. Both testing and inspection provide for verification and validation that the product and the process are functioning as desired. We learn as we progress through the development, and if all goes well, we can expect a successful launch. A comparable approach is used in the food and drug industry as the Hazard Analysis and Critical Control Point (HACCP) system, where critical control points are often inspections for temperature, cleanliness, and other industry-specific requirements.

Below is an outline for a TIEMPO document[1]:

1. Systems Introduction

1.1. Mission Description

1.2. System Threat Assessment

1.3. Min. Acceptable Operational Performance Requirements

1.4. System Description

1.5. Testing Objectives

1.6. Inspection Objectives

1.7. Evaluation Objectives

1.8. Critical Technical Parameters

2. Integrated Test, Inspection and Evaluation Program Summary

2.1. Inspection Areas (documents, code, models, material)

2.2. Inspection Schedule

2.3. Integrated Test Program Schedule

2.4. Management

3. Developmental Test and Evaluation Outline

3.1. Simulation

3.2. Developmental Test and Evaluation Overview

3.3. Component Test and Evaluation

3.4. Subsystem Test and Evaluation

3.5. Developmental Test and Evaluation to Date

3.6. Future Developmental Test and Evaluation

3.7. Live Use Test and Evaluation

4. Inspection

4.1. Inspection of models (model characteristics, model vs. real world)

4.2. Inspection of Material (Physical) Parts

4.3. Prototype Inspections

4.4. Post Test Inspections

4.5. Inspection Philosophy

4.6. Inspection Documentation

4.7. Software Inspections

4.8. Design Reviews

5. Operational Test and Evaluation Outline

5.1. Operational Test and Evaluation Overview

5.2. Operational Test and Evaluation to Date

5.3. Features / Function delivery

5.4. Future Operational Test and Evaluation

6. Test and Evaluation Resource Summary

6.1. Test Articles

6.2. Test Sites and Instrumentation

6.3. Test Support

6.4. Inspection (Requirements and Design Documentation) resource requirements

6.5. Inspection (source code) resource requirements

6.6. Inspection prototype resource requirements

6.7. Threat Systems / Simulators

6.8. Test Targets and Expendables

6.9. Operational Force Test Support

6.10. Simulations, Models and Test beds

6.11. Special Requirements

6.12. T&E Funding Requirements

6.13. Manpower/Personnel Training

[1] Pries, Kim H., and Jon M. Quigley. Total Quality Management for Project Management. Boca Raton, FL: CRC Press, 2013.