Verification, Validation, and Digital Twins in Software‑Defined Vehicles (SDVs)

Why SDVs Demand Stronger Verification and Validation

The shift to software‑defined vehicles (SDVs) is transforming automotive engineering and multiplying its risks. As ECUs consolidate into centralized compute platforms and features increasingly rely on AI, machine learning, and over‑the‑air (OTA) updates, safety, cybersecurity, and reliability take center stage.

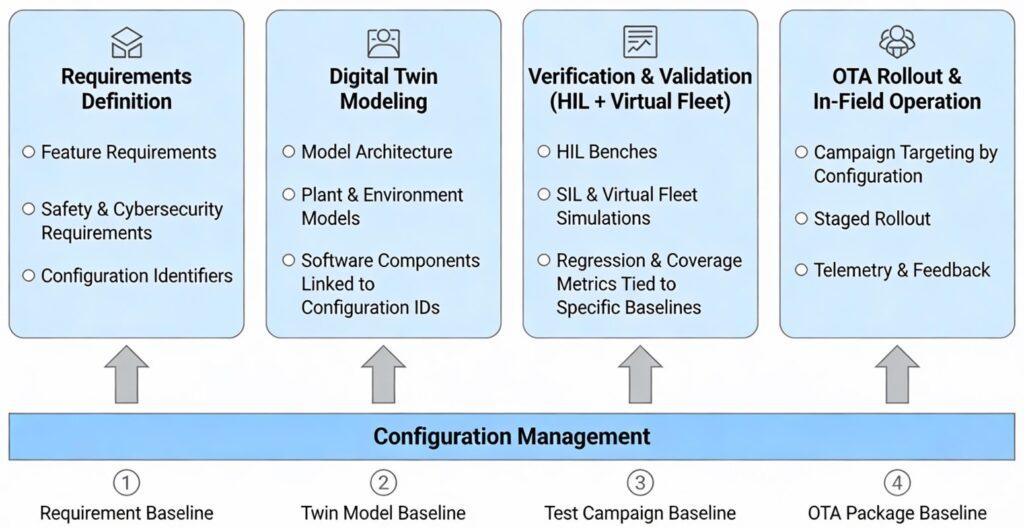

This complexity makes verification and validation (V&V) more critical than ever. Regulators are tightening expectations through standards such as ISO 26262 for functional safety and UN R155 for cybersecurity management. Validation is no longer a scheduled milestone; it is an ongoing discipline integrated into continuous development pipelines. The connection between SDVs, configuration management (CM), and verification matters most when it is combined with the ability to launch product to customers quickly via over-the-air downloads. We chose the word "launch" deliberately, to evoke a missile or other projectile.

In this new paradigm, digital twins in SDVs are emerging as an essential enabler. They unite modeling, simulation, and test automation to manage risk at scale, and they keep that work connected from initial ideas and early simulations through to a producible, maintainable system.

SDVs need configuration-aware twins

Software-defined vehicles, digital twins, and configuration management form a tightly coupled ecosystem that makes large-scale SDV development and OTA operations feasible and safe. Without explicit configuration control, even the best SDV digital twin strategy quickly degrades into untrustworthy simulations and risky field updates.

SDVs run on layered software stacks across many ECUs, networks, and feature variants, which means “the vehicle” is really a moving target of configurations over time. A digital twin for an SDV must therefore represent not just behavior, but the precise software, hardware, and data configuration that behavior depends on.

- SDV digital twins typically track ECU types, software versions, feature flags, security policies, and calibration sets, often as a “software twin” or in-vehicle state model in the cloud.

- This configuration-centric twin becomes the reference for simulation, diagnostics, OTA targeting, and safety argumentation, rather than a generic, idealized model.
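As a rough illustration of what such a configuration record could look like, here is a minimal sketch in Python. The class name `TwinConfiguration`, its fields, and the 12-character `config_id` fingerprint are assumptions made for this example, not the data model of any particular twin platform.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class TwinConfiguration:
    """Hypothetical configuration record held by one vehicle's digital twin."""

    vin: str
    ecu_software: dict    # ECU type -> installed software version
    feature_flags: dict   # feature name -> enabled (True/False)
    security_policy: str  # identifier of the active security policy
    calibration_set: str  # identifier of the calibration data set

    def config_id(self) -> str:
        """Return a short fingerprint of the configuration, excluding the VIN.

        Two vehicles with identical software, flags, policy, and calibration
        get the same ID, so they can share simulation and test evidence.
        """
        payload = {
            "ecu_software": self.ecu_software,
            "feature_flags": self.feature_flags,
            "security_policy": self.security_policy,
            "calibration_set": self.calibration_set,
        }
        # sort_keys makes the serialization independent of dict insertion order.
        canonical = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


car_a = TwinConfiguration(
    vin="VIN-A",
    ecu_software={"brake_ctrl": "2.1.0", "adas": "5.3.1"},
    feature_flags={"lane_keep": True},
    security_policy="policy-7",
    calibration_set="cal-2024-03",
)
car_b = TwinConfiguration(
    vin="VIN-B",
    ecu_software={"adas": "5.3.1", "brake_ctrl": "2.1.0"},  # same content, different order
    feature_flags={"lane_keep": True},
    security_policy="policy-7",
    calibration_set="cal-2024-03",
)
print(car_a.config_id() == car_b.config_id())  # True: same configuration, different vehicles
```

Leaving the VIN out of the fingerprint is a design choice for this sketch: it lets simulation results be keyed by configuration rather than by individual vehicle.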

Digital twins as configuration baselines

A mature SDV twin acts as a live configuration baseline for each vehicle and virtual instance. Configuration management ensures that every twin instance corresponds to a specific, identifiable build and variant, not a vague “latest” design.

- Platforms like Sibros’ “software twin” keep a historical record of all software and configuration changes across the vehicle lifecycle, effectively embedding CM into the twin.

- Case studies show that digital twins maintain complete data representations for design, development, configuration, validation, and maintenance, turning the twin into a configuration-controlled artifact rather than a disposable model.

CM enables trustworthy SDV simulations and virtual fleets

Digital twins add verification value only if their configuration matches reality closely enough to produce credible evidence. In SDVs, configuration management connects model structure, parameters, and software versions to the exact vehicle builds and feature sets they represent. Unvetted models are merely theoretical and put everything built on them at risk. Simulations provide the base, the footings if you will, on which the product is developed; if that base sits on soft sand or marsh, the results are easy to predict. Models are vetted through engineering testing and verification to understand the parameters, and their magnitudes, that affect the proposed product.

- Automotive digital twin platforms stress that you “cannot physically test every variant,” so you need a configuration-accurate virtual fleet to cover OTA campaigns and variant combinations.

- CM ensures that each virtual vehicle in that fleet reflects a valid combination of ECUs, software, and options, preventing misleading results from impossible or outdated configurations.
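The validity check described above can be sketched as a filter over the virtual fleet. The compatibility table `SUPPORTED_HARDWARE`, the ECU names, and the version strings below are all invented for illustration; a real program would draw them from its own release and CM records.

```python
# Hypothetical release data: (ECU, software version) -> hardware revisions it supports.
SUPPORTED_HARDWARE = {
    ("brake_ctrl", "2.0.0"): {"rev_a", "rev_b"},
    ("brake_ctrl", "2.1.0"): {"rev_b", "rev_c"},
    ("adas", "5.3.1"): {"rev_c"},
}


def configuration_problems(vehicle):
    """Return a list of reasons why a virtual vehicle is not a valid build."""
    problems = []
    for ecu, info in vehicle["ecus"].items():
        allowed = SUPPORTED_HARDWARE.get((ecu, info["software"]))
        if allowed is None:
            problems.append(f"{ecu}: software {info['software']} is not a known release")
        elif info["hardware"] not in allowed:
            problems.append(
                f"{ecu}: software {info['software']} does not support hardware {info['hardware']}"
            )
    return problems


def valid_virtual_fleet(fleet):
    """Keep only the virtual vehicles whose configuration passes every check."""
    return [v for v in fleet if not configuration_problems(v)]


fleet = [
    {"name": "sim-001", "ecus": {"brake_ctrl": {"software": "2.1.0", "hardware": "rev_b"}}},
    {"name": "sim-002", "ecus": {"brake_ctrl": {"software": "2.1.0", "hardware": "rev_a"}}},
    {"name": "sim-003", "ecus": {"adas": {"software": "4.0.0", "hardware": "rev_c"}}},
]
print([v["name"] for v in valid_virtual_fleet(fleet)])  # ['sim-001']
```

Here `sim-002` pairs software with hardware it does not support, and `sim-003` runs a version that was never released, so neither would produce meaningful simulation evidence.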

OTA campaigns: twins plus CM to avoid SDV chaos

In the OTA context, SDV digital twins and configuration management work together to de-risk updates at scale. Vendors now run “dry‑run” OTA campaigns in cloud-based digital twins of real vehicle configurations to predict issues before touching customer cars.

- OTA platforms use twin-backed configuration inventories to decide which vehicles are eligible for a given update, verify dependencies, and simulate the impact on specific configurations.

- Configuration management guarantees that the software package tested in the twin is the same one later delivered to matching physical vehicles, preserving traceability from simulation to deployment.
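A simplified sketch of those two checks, eligibility targeting and package identity, might look like the following. The inventory layout, the campaign fields (`target_ecu`, `upgradable_from`, `requires`), and the exact-version dependency rule are assumptions for this example rather than any vendor's OTA API.

```python
import hashlib


def package_digest(package_bytes):
    """SHA-256 digest used to identify an update package."""
    return hashlib.sha256(package_bytes).hexdigest()


def eligible_vehicles(inventory, campaign):
    """Return the VINs whose recorded twin state satisfies the campaign."""
    selected = []
    for vin, software in inventory.items():
        # The target ECU must currently run a version the update can start from.
        if software.get(campaign["target_ecu"]) not in campaign["upgradable_from"]:
            continue
        # Every dependency must be installed at exactly the required version.
        if any(software.get(ecu) != version for ecu, version in campaign["requires"].items()):
            continue
        selected.append(vin)
    return sorted(selected)


def matches_tested_package(delivered_bytes, tested_digest):
    """True only if the delivered package has the same digest as the one tested in the twin."""
    return package_digest(delivered_bytes) == tested_digest


# Twin-backed inventory: VIN -> {ECU: installed software version} (made-up data).
inventory = {
    "VIN-A": {"brake_ctrl": "2.0.0", "gateway": "1.4.0"},
    "VIN-B": {"brake_ctrl": "2.0.0", "gateway": "1.3.0"},  # dependency too old
    "VIN-C": {"brake_ctrl": "1.9.0", "gateway": "1.4.0"},  # not an allowed starting version
}
campaign = {
    "target_ecu": "brake_ctrl",
    "upgradable_from": {"2.0.0"},
    "requires": {"gateway": "1.4.0"},
}
tested = package_digest(b"brake_ctrl 2.1.0 image")

print(eligible_vehicles(inventory, campaign))                     # ['VIN-A']
print(matches_tested_package(b"brake_ctrl 2.1.0 image", tested))  # True
print(matches_tested_package(b"brake_ctrl 2.1.1 image", tested))  # False
```

Comparing digests rather than version labels is what ties the deployed artifact to the one that was actually exercised in the twin.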

SDV DevOps: CM as the spine between code, twin, and car

SDV DevOps architectures now explicitly include digital twin services and configuration registries as first-class components. Configuration management becomes the spine that connects code repositories, build pipelines, digital twins, and in-vehicle states.

- Reference SDV toolchains describe in-vehicle digital twin services synchronized with cloud twins, backed by service registries and state descriptions tied to specific software and hardware identities.

- As new software is integrated and verified in the twin, CM propagates configuration IDs and baselines through CI/CD, so each test result and field behavior can be traced back to exact SDV stack versions.
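One way to picture that traceability is to stamp every test outcome with the baseline it ran against, then look evidence up by vehicle. The `VerificationRecord` class, the baseline IDs such as `BL-0041`, and the test names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationRecord:
    """One test outcome, stamped with the configuration baseline it ran against."""

    test_name: str
    passed: bool
    baseline_id: str


def records_for_vehicle(vin, vehicle_baselines, records):
    """Return every verification record for the baseline installed on a vehicle."""
    baseline_id = vehicle_baselines[vin]
    return [r for r in records if r.baseline_id == baseline_id]


def baselines_with_failures(records):
    """Return the baseline IDs that have at least one failed test, sorted."""
    return sorted({r.baseline_id for r in records if not r.passed})


records = [
    VerificationRecord("brake_latency_sil", True, "BL-0041"),
    VerificationRecord("ota_rollback_hil", False, "BL-0041"),
    VerificationRecord("brake_latency_sil", True, "BL-0042"),
]
vehicle_baselines = {"VIN-A": "BL-0041", "VIN-B": "BL-0042"}

print([r.test_name for r in records_for_vehicle("VIN-A", vehicle_baselines, records)])
# ['brake_latency_sil', 'ota_rollback_hil']
print(baselines_with_failures(records))  # ['BL-0041']
```

With this link in place, a field report from `VIN-A` leads directly to the tests that were run on its exact stack, including the one that failed.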

Verification: Proving the Design Before Hardware

Verification confirms that the design implementation meets requirements before physical prototypes even exist. For SDVs, this means using model‑based design and digital twins to explore thousands of operational scenarios virtually. Typical activities include:

- Building virtual ECUs and systems for early logic validation.

- Conducting software‑in‑the‑loop (SIL) and hardware‑in‑the‑loop (HIL) tests using real‑time simulation frameworks.

- Applying AI‑driven scenario generation to uncover edge cases that traditional rule‑based datasets might miss.
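To make the idea of a SIL-style scenario sweep concrete, here is a toy sketch. It uses a plain parameter grid as a stand-in for AI-driven scenario generation, and the braking logic, the 2-second time-to-collision requirement, and all function names are invented for illustration.

```python
from itertools import product

TTC_LIMIT_S = 2.0  # hypothetical requirement: brake when time-to-collision is below 2 s


def brake_request(distance_m, closing_speed_mps):
    """Toy logic under test: request braking when the gap is closing too fast."""
    return distance_m < TTC_LIMIT_S * closing_speed_mps


def required_brake(distance_m, closing_speed_mps):
    """Requirement stated independently of the implementation, used as an oracle."""
    if closing_speed_mps <= 0:
        return False  # gap is steady or opening, so there is no collision course
    return distance_m / closing_speed_mps < TTC_LIMIT_S


def failing_scenarios(controller, scenarios):
    """Run every scenario and return those where the controller disagrees with the requirement."""
    return [
        (d, v) for d, v in scenarios
        if controller(d, v) != required_brake(d, v)
    ]


# Grid of scenarios: gap distances in meters crossed with closing speeds in m/s.
scenarios = list(product(range(5, 105, 5), range(0, 45, 5)))

print(len(scenarios))                               # 180
print(failing_scenarios(brake_request, scenarios))  # []
```

Run on every commit, a sweep like this acts as a regression check: any change to `brake_request` that violates the requirement shows up as a non-empty list of failing scenarios.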

Modern engineering organizations extend the traditional V‑model into continuous verification. Automated checks run in CI/CD pipelines, ensuring that every code commit triggers regression assessments and compliance checks across critical safety functions. Such early and repeated testing drastically reduces integration risk—a key lesson from leaders in both the automotive and aerospace industries.

Validation: Proving Behavior in Real and “Near‑Real” Worlds

While verification tests the design, validation ensures the system performs correctly under realistic conditions. For SDVs, that means integrating lab, bench, and fleet testing with virtual validation environments powered by digital twins.

Leading validation strategies now include:

- Driver‑in‑the‑loop (DiL) simulators and OTA shadow mode testing for pre‑deployment evaluation.

- HIL setups that replicate real sensor data streams, network latencies, and vehicle dynamics.

- AI‑driven scenario generation to replay millions of edge cases related to perception, decision, and action layers.

Bridging the “model–reality gap” is foundational. Engineers refine their digital twin models using fleet data, diagnostics, and designed experiments, allowing virtual tests to accurately represent physical performance. This balance of simulated and empirical evidence elevates the confidence of each software release.
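A very small sketch of measuring and narrowing that gap: compute the root-mean-square error between twin predictions and fleet measurements, then fit a single least-squares correction factor. The data values and the one-parameter correction are assumptions for illustration; real model calibration typically involves many parameters and designed experiments.

```python
import math


def rmse(predicted, measured):
    """Root-mean-square error between twin predictions and fleet measurements."""
    if len(predicted) != len(measured) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    return math.sqrt(sum(squared) / len(squared))


def best_scale_factor(predicted, measured):
    """Least-squares factor k that minimizes sum((m - k * p) ** 2)."""
    return sum(p * m for p, m in zip(predicted, measured)) / sum(p * p for p in predicted)


# Made-up example: stopping distances in meters for three test speeds.
twin_prediction = [10.0, 20.0, 30.0]
fleet_measurement = [11.0, 22.0, 33.0]

gap_before = rmse(twin_prediction, fleet_measurement)
k = best_scale_factor(twin_prediction, fleet_measurement)
gap_after = rmse([k * p for p in twin_prediction], fleet_measurement)

print(round(gap_before, 3))  # 2.16
print(round(k, 3))           # 1.1
print(round(gap_after, 3))   # 0.0
```

In this contrived data set the twin underestimates by a constant 10 percent, so one factor closes the gap entirely; a residual error that stays large after calibration would point to a structural problem in the model.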

Practical Implementation Patterns for SDV V&V

Verification and validation in SDVs thrive on structured, repeatable approaches. Automotive systems expert Jon M. Quigley outlines five complementary testing environments that together form a balanced validation ecosystem:

- Lab testing for component‑level assurance.

- Bench testing for integrated subsystem checks.

- SIL and HIL simulation for logic, timing, and interface verification.

- Vehicle testing to confirm real‑world performance and interactions.

- Field data validation to detect long‑tail failures during customer usage.

Performance indicators—such as coverage metrics, defect discovery rate, and confidence indexes—inform release decisions. Each layer of testing contributes evidence to demonstrate system integrity in compliance audits and safety assessments.
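As a hedged illustration of how such indicators could feed a release decision, the sketch below computes requirement coverage and a recent defect discovery rate, then applies a simple gate. The thresholds (95 percent coverage, at most one new defect per week) and the function names are assumptions, not recommended values.

```python
def requirement_coverage(requirements, verified):
    """Fraction of requirements that have at least one passing verification."""
    required = set(requirements)
    if not required:
        raise ValueError("no requirements given")
    return len(required & set(verified)) / len(required)


def defect_discovery_rate(defects_per_week, window=3):
    """Average number of new defects per week over the most recent weeks."""
    recent = defects_per_week[-window:]
    if not recent:
        raise ValueError("no defect history given")
    return sum(recent) / len(recent)


def release_ready(coverage, discovery_rate, min_coverage=0.95, max_rate=1.0):
    """Hypothetical gate: enough coverage and a discovery rate that has tailed off."""
    return coverage >= min_coverage and discovery_rate <= max_rate


requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
verified = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
defects_per_week = [9, 6, 4, 2, 1, 0]

coverage = requirement_coverage(requirements, verified)
rate = defect_discovery_rate(defects_per_week)

print(coverage)                       # 1.0
print(rate)                           # 1.0
print(release_ready(coverage, rate))  # True
```

A gate like this does not replace engineering judgment, but it makes the evidence behind a release decision explicit and repeatable.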

Key Takeaways

- Digital twins in SDVs make large‑scale virtual scenario testing realistic and repeatable.

- Continuous verification through CI/CD pipelines reduces integration risk and defect escapes.

- Validation blends DiL, HIL, and OTA test modes to ensure real‑world reliability.

- Bridging the model–reality gap requires ongoing feedback from fleet and diagnostics data.

- Combining lab, bench, simulation, and field testing builds measurable confidence for release.

- Regulatory standards (ISO 26262, UN R155) now expect V&V to be lifecycle‑embedded, not event‑based.

Adopting digital twins in SDVs transforms verification and validation from checkpoints into a continuous learning loop, one that underwrites safety, compliance, and innovation across every connected vehicle on the road.
